Feb 3, 2025

Artificial intelligence (AI) tools like ChatGPT have quickly become indispensable in modern business operations. From drafting content and streamlining customer service to analyzing data, these tools offer unparalleled convenience and efficiency. Businesses across industries are increasingly relying on AI to enhance productivity and reduce costs.

However, as with any technology, the rewards come with risks. Cybersecurity research has revealed a concerning vulnerability in tools like ChatGPT: attackers can manipulate the tool’s long-term memory and plant malicious instructions in it. This exploit not only threatens the security of sensitive business information but also opens a new avenue for corporate espionage and data theft.

For organizations that rely heavily on AI, the implications are clear: failing to address these vulnerabilities could leave your company exposed to devastating breaches. Understanding this risk and taking proactive measures to mitigate it is essential for protecting your business. In this article, the cybersecurity experts at Blade Technologies dive into how these exploits work, why they pose a significant threat, and what steps your organization can take to safeguard its data and systems.

Understanding the Exploit: False Memories and Malicious Commands

Security researchers have uncovered a troubling exploit in AI systems like ChatGPT that shows how vulnerable these tools can be in business settings. The issue lies in ChatGPT’s long-term memory, which stores details from user interactions so that later responses can draw on them. While this feature is designed to improve performance, cybercriminals can also manipulate it in alarming ways.

Cybersecurity researchers found that hackers could inject false memories and commands into ChatGPT’s long-term memory by using malicious content. For example, an attacker could hide instructions in content hosted online, such as an image. If ChatGPT is directed to “browse” that content, such as through its integration with search engines like Bing, it can unknowingly treat the hidden text as instructions and store them in its memory.

In one experiment conducted by security researcher Johann Rehberger, the injected instructions directed the ChatGPT macOS application to silently exfiltrate all user inputs, including sensitive business prompts and interactions, to a remote server controlled by the attacker. Because the instructions were stored in long-term memory, the leak was persistent, making it possible for attackers to continuously siphon off proprietary information without the user ever realizing it.

This exploit highlights a critical misconception about AI tools: they are not immune to the vulnerabilities that affect other technologies. Just as a computer can be compromised by malware, AI systems can “inherit” malicious instructions if they are not properly secured. In this case, ChatGPT’s reliance on external data sources and its ability to adapt over time became a liability, allowing hackers to hijack its memory and turn it into a tool for espionage.

Why the ChatGPT Memory Exploit Is Dangerous for Businesses

The discovery of vulnerabilities in ChatGPT’s long-term memory poses a serious threat to businesses that incorporate AI tools into their daily operations. While the convenience and efficiency of ChatGPT are undeniable, the potential for hackers to exploit this technology highlights the need for caution. Sensitive inputs—like product roadmaps, customer data, or financial projections—could be intercepted by attackers and used for malicious purposes. Worse yet, the breach would likely go unnoticed until significant damage has already been done. Here’s why this exploit is particularly dangerous for businesses:

Loss of Confidential Information

Businesses often use ChatGPT to handle tasks that involve sensitive or proprietary information, such as drafting confidential documents, analyzing data, or brainstorming new product ideas. If a hacker injects malicious instructions or false memories into ChatGPT, this critical data can be intercepted and sent to unauthorized servers. The loss of trade secrets, customer data, or intellectual property could have devastating financial and competitive consequences.

Compliance and Legal Risks

For organizations in regulated industries like healthcare, finance, or technology, the exposure of sensitive information could lead to violations of laws like HIPAA, GDPR, or CCPA. Non-compliance can result in hefty fines, legal battles, and even restrictions on business operations. Additionally, data breaches caused by AI vulnerabilities can make it harder for an organization to meet ongoing compliance requirements.

Reputational Damage

Data breaches undermine trust. Customers, partners, and stakeholders expect businesses to protect their information. If a breach occurs due to an exploited AI tool like ChatGPT, the resulting negative publicity could damage the company’s reputation for years. Customers may leave, partnerships may dissolve, and rebuilding trust could take significant time and resources.

Risk of Operational Disruption

Hackers gaining access to sensitive interactions through ChatGPT could open doors to broader attacks, such as phishing, ransomware, or system sabotage. For instance, stolen internal data might be used to craft convincing phishing emails targeting employees, leading to additional breaches or operational shutdowns.

Amplified Attack Surface

AI tools like ChatGPT increase the attack surface of businesses. Every interaction with the tool is a potential vulnerability if it is not properly secured, and because ChatGPT is a third-party application, it is often harder to cover in an organization’s cybersecurity strategy. Hackers exploiting the memory feature of ChatGPT could even leverage AI responses to guide further attacks or identify other weaknesses in the company’s defenses.

How Businesses Can Protect Themselves from False Memory Hacks in ChatGPT

The risks associated with ChatGPT’s vulnerabilities make it essential for businesses to secure their data and operations before an incident occurs. Hackers are constantly developing new ways to exploit emerging technologies, and AI tools like ChatGPT are no exception. By combining technology, policies, and employee training, organizations can mitigate these threats and use AI tools safely. Here’s how:

1. Implement Network Monitoring Solutions

Network monitoring is a critical line of defense against data breaches caused by exploits such as false memory injection in ChatGPT. By continuously analyzing data flows and identifying unusual patterns, network monitoring tools can detect and stop unauthorized data exfiltration in real time. Blade Technologies specializes in providing businesses with comprehensive network monitoring solutions, ensuring that potential exploits are detected and neutralized before they cause harm. This includes:

- Detecting Anomalous Activity: Advanced monitoring solutions flag unexpected outbound data transfers or suspicious communication with unknown servers.

- Protecting Against Persistent Threats: Tools like intrusion detection systems (IDS) and Security Information and Event Management (SIEM) platforms can identify and isolate malicious activity related to AI vulnerabilities.

- Ensuring Incident Response Readiness: Proactive monitoring enables faster incident response to breaches, minimizing damage and downtime.

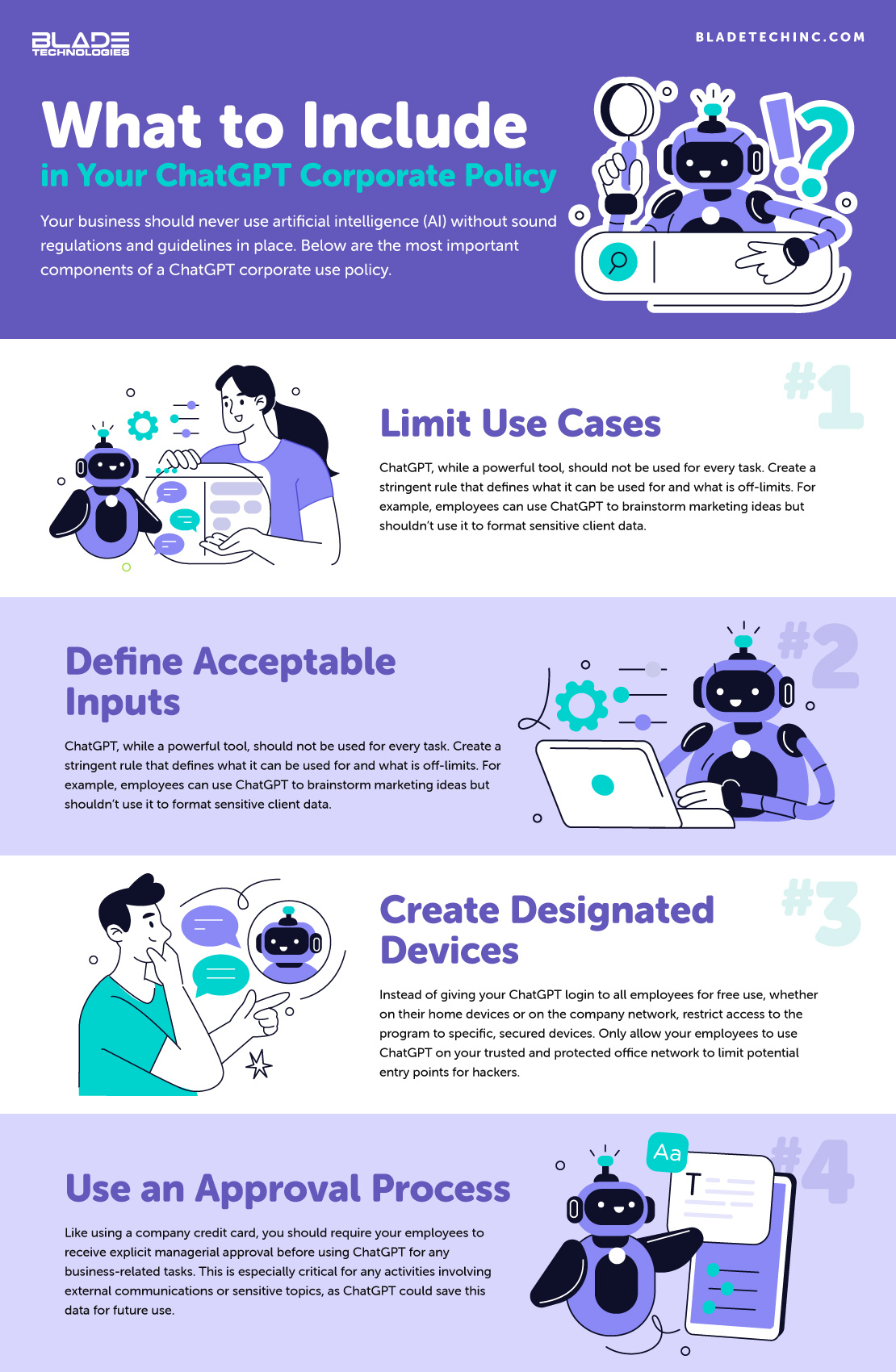
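
As a rough illustration of the anomaly detection described above, the following Python sketch flags outbound requests that go to hosts outside an approved list or that carry unusually long query strings, a pattern that can indicate data being smuggled out inside a URL. The host name `ai-vendor.example`, the function name, and the 200-character threshold are all assumptions made for this example, not settings from any particular monitoring product.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; a real deployment would maintain its own.
ALLOWED_HOSTS = {"ai-vendor.example"}


def flag_suspicious_requests(urls, allowed_hosts=ALLOWED_HOSTS, max_query_len=200):
    """Return (url, reason) pairs for outbound requests worth a closer look."""
    findings = []
    for url in urls:
        parts = urlsplit(url)
        host = (parts.hostname or "").lower()
        if host not in allowed_hosts:
            # Traffic to a server nobody approved is the clearest warning sign.
            findings.append((url, "unknown host"))
        elif len(parts.query) > max_query_len:
            # Long query strings can carry exfiltrated text inside the URL.
            findings.append((url, "unusually long query string"))
    return findings
```

A dedicated IDS or SIEM platform applies far richer rules than this, but the core idea is the same: compare each outbound connection against what is expected and surface the exceptions for review.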

2. Create a Corporate Policy on ChatGPT Usage

To minimize risks, businesses should establish clear guidelines for how employees can use AI tools like ChatGPT. A detailed policy can prevent the accidental exposure of sensitive information and set boundaries for appropriate usage. Having a corporate policy ensures that all employees are on the same page about the risks and responsibilities of using AI tools. Key components of this policy include:

- Restricted Use Cases: Define which tasks ChatGPT can be used for, emphasizing non-sensitive and non-critical functions. For example, employees could use the tool to draft generic communications or brainstorm ideas but avoid inputting confidential data.

- Prohibited Inputs: Ban the use of proprietary information, customer data, passwords, or financial details in prompts.

- Designated Devices: Restrict access to ChatGPT to specific, secured devices to limit potential entry points for hackers.

- Approval Processes: Require managerial approval before using ChatGPT for any business-related tasks, particularly those involving external communication or sensitive topics.
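
One way to back up the "Prohibited Inputs" rule with tooling is to screen prompts before they leave the organization. The sketch below is a minimal example under stated assumptions: the three patterns, their labels, and the function name are illustrative choices, and simple regular expressions like these will miss many kinds of sensitive data and raise some false alarms.

```python
import re

# Illustrative patterns only; each organization would define its own list.
PROHIBITED_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "possible card number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "credential keyword": re.compile(
        r"\b(?:password|passwd|api[_ -]?key|secret)\b", re.IGNORECASE
    ),
}


def find_policy_violations(prompt):
    """Return the labels of every prohibited pattern found in the prompt."""
    return [
        label
        for label, pattern in PROHIBITED_PATTERNS.items()
        if pattern.search(prompt)
    ]
```

A prompt that returns an empty list passes the screen. Anything else can be blocked or sent for review, in line with the approval process defined in the policy.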

3. Train Employees on Cybersecurity Best Practices

Employees play a crucial role in preventing breaches, especially when using tools like ChatGPT. Ongoing training keeps employees aware of evolving cybersecurity threats and reinforces good practices. Training programs should focus on:

- Recognizing Risks: Educate staff about the vulnerabilities of AI tools and the potential consequences of mishandling them.

- Avoiding Suspicious Content: Teach employees to identify potentially malicious prompts, links, or requests involving AI tools.

- Following Usage Policies: Ensure employees understand and adhere to the company’s AI usage guidelines.

- Reporting Incidents: Encourage prompt reporting of any suspicious activity related to AI tools, whether it’s unusual responses from ChatGPT or unexpected network behavior.

- Checking ChatGPT Memory: Have employees review ChatGPT’s memory feature on a regular basis to confirm that it stores no confidential or sensitive information and contains no entries they do not recognize.
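
The memory check in the last item can be partly supported by a script. The sketch below assumes an employee has copied the stored memory entries into a plain list of strings by hand; it does not call any ChatGPT interface. The hint phrases and the function name are hypothetical, chosen to catch entries that read like standing instructions or that point to an external server.

```python
import re

URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)

# Hypothetical phrases that read like standing instructions, not user facts.
INSTRUCTION_HINTS = ("always", "every conversation", "from now on", "do not tell")


def review_memory_entries(entries):
    """Return (entry, reasons) pairs for memory entries that deserve review."""
    flagged = []
    for entry in entries:
        lowered = entry.lower()
        reasons = []
        if URL_PATTERN.search(entry):
            reasons.append("contains a URL")
        if any(hint in lowered for hint in INSTRUCTION_HINTS):
            reasons.append("reads like a standing instruction")
        if reasons:
            flagged.append((entry, reasons))
    return flagged
```

A flagged entry is not proof of compromise. It is a prompt for the employee to ask whether they recognize the memory and to report it if they do not.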

4. Evaluate AI Vendors for Security Standards

Before adopting any AI tool into your business practices, assess the provider’s security practices and capabilities. Choose AI vendors and programs that prioritize security and demonstrate a commitment to safeguarding user data. Questions to consider include:

- How does the program protect user inputs and outputs?

- Does the program/vendor offer transparency about how data is processed and stored?

- What safeguards are in place to prevent unauthorized access to the program?

- Does the vendor conduct regular vulnerability testing?

5. Establish Clear Data Governance Practices

Data governance ensures that sensitive information is handled securely across the organization. By implementing strong data governance, companies can significantly reduce their exposure to risks associated with AI. When it comes to tools like ChatGPT, businesses should:

- Prohibit Sensitive Data Uploads: Block employees from inputting confidential documents, proprietary strategies, or customer information into AI systems.

- Use Access Controls: Limit who can use ChatGPT within the organization, granting access only to those who need it for their roles.

- Monitor Usage Logs: Regularly review ChatGPT usage to identify potential misuse or breaches of policy.
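
The access control and log review practices above can be combined in a simple check. This sketch assumes usage logs are available as a list of dictionaries with a `user` field, which is a hypothetical format; real log exports will differ by vendor and plan.

```python
from collections import Counter


def review_usage(log_entries, approved_users):
    """Count prompts per user and single out users who are not approved.

    log_entries: iterable of dicts with a hypothetical 'user' key.
    approved_users: set of user names allowed to use the AI tool.
    """
    counts = Counter(entry["user"] for entry in log_entries)
    unapproved = {
        user: n for user, n in counts.items() if user not in approved_users
    }
    return counts, unapproved
```

Running a check like this on a regular schedule shows who is using the tool and how often, and makes unapproved access visible early.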

Balance Innovation and Security in AI Usage with Blade Technologies

AI tools like ChatGPT can revolutionize how businesses operate, streamlining workflows, enhancing productivity, and fostering innovation. However, as with any powerful technology, these tools come with inherent risks that cannot be ignored. Vulnerabilities that allow hackers to exploit ChatGPT’s memory feature serve as a stark reminder that even cutting-edge technologies are not immune to cyber threats.

For businesses, the stakes are particularly high. The possibility of sensitive information being exposed to malicious actors underscores the urgent need for robust security measures. At Blade Technologies, we understand the evolving threat landscape and can help you stay one step ahead. Our network monitoring solutions and cybersecurity expertise can help your business detect and neutralize threats before they cause harm. We can also help you create a clear framework for AI tool usage so that your employees can use these technologies safely and effectively.

By partnering with Blade Technologies and acting now, you can harness the power of AI while safeguarding your data, reputation, and operations. Reach out to Blade Technologies today to learn how we can help secure your business in an increasingly AI-powered world.
