Mar 3, 2025

Microsoft has officially announced that Copilot, its AI-powered assistant, will be integrated into all Microsoft 365 applications, making it a mandatory feature for users. Alongside this sweeping AI integration, Microsoft is also implementing a 30% price increase across all Microsoft 365 subscription plans, a move that has drawn widespread criticism from businesses and individual users alike.

While Microsoft markets Copilot as a productivity-boosting tool capable of drafting emails, generating reports, and streamlining workflows, cybersecurity experts and IT professionals are raising concerns about its potential risks. Unlike traditional AI assistants, Copilot operates as an advanced tracking tool, recording keystrokes and screen activity, an approach that bears alarming similarities to keyloggers and spyware. If compromised, Copilot could expose a massive amount of sensitive business data, making it a prime target for hackers.

Beyond privacy risks, many users are outraged at Microsoft’s decision to enforce Copilot’s integration across its entire suite of Office apps, leaving businesses with little choice but to adopt the tool or seek alternative productivity platforms. The 30% price increase further compounds frustrations, particularly for small and medium-sized businesses (SMBs) that rely on Microsoft 365 for essential operations.

In this article, the cybersecurity experts at Blade Technologies will break down what Copilot is, its potential benefits for businesses and workflow automation, the major security risks it introduces, the controversy surrounding Microsoft’s announcement, and cost-effective alternatives for those looking to switch. Continue reading to explore all the essential information surrounding Copilot and Microsoft 365.

What is Microsoft Copilot?

Microsoft Copilot is being marketed as a powerful AI assistant designed to enhance productivity across all Microsoft 365 applications. Built on OpenAI’s GPT-4 technology, Copilot is intended to help users draft documents, summarize emails, analyze data, and automate workflows within apps like Word, Excel, Outlook, Teams, and PowerPoint. However, its forced integration into Microsoft 365, coupled with its extensive data-tracking capabilities, has sparked serious privacy and security concerns.

Despite the controversy, Copilot does offer several advantages for businesses looking to streamline their workflows:

- Boosts Productivity: By automating repetitive tasks, Copilot can help reduce manual workload and improve efficiency in document creation, data analysis, and communication.

- Enhances Collaboration: Copilot can summarize emails, generate reports, and provide meeting notes, allowing teams to stay aligned without sifting through endless messages and documents.

- AI-Assisted Decision-Making: With machine learning-powered insights, businesses can quickly analyze large datasets, detect trends, and make informed decisions using Excel.

However, while Microsoft is promoting Copilot as a game-changer for workplace efficiency, it’s not highlighting the risks associated with its data tracking capabilities. Unlike traditional AI assistants, Copilot continuously monitors user activity, recording keystrokes, screen interactions, and application usage.

Security Concerns Surrounding Copilot

Microsoft Copilot may promise enhanced productivity and automation, but its built-in data collection capabilities make it a cybersecurity nightmare. With its ability to monitor keystrokes, track screen activity, and analyze user behavior, Copilot poses a serious risk if exploited by cybercriminals, misused by Microsoft, or mishandled by businesses.

1. Data Collection and Privacy Issues

One of the biggest concerns surrounding Copilot is its invasive data tracking. Microsoft has designed Copilot to function as a real-time assistant, which means it must continuously process and analyze user activity. This data-driven approach raises red flags for businesses, privacy advocates, and security experts.

- Always-On Monitoring: Copilot tracks user inputs, documents, and interactions within Microsoft 365 apps. This continuous surveillance could inadvertently capture sensitive information, including financial records, legal documents, and confidential business communications.

- Keystroke Logging & Screen Recording: Although Microsoft does not explicitly describe Copilot as a keylogger, its ability to track user input and application usage makes it functionally similar to screen recording and keylogging software. If compromised, this data could be exposed to cybercriminals.

- Cloud-Based Data Storage: Copilot relies on Microsoft’s cloud infrastructure, meaning everything it processes is stored and analyzed remotely. If Microsoft’s servers are breached, misconfigured, or exploited, massive amounts of sensitive data could be leaked.

- Microsoft’s History of Data Privacy Issues: Microsoft has faced multiple legal challenges over data privacy, including concerns over Windows telemetry tracking and Office 365 data collection. With Copilot integrated into every Office app, the risk of unauthorized data access and privacy violations increases significantly.

2. Vulnerability to Exploitation

Copilot’s massive data collection capabilities make it a goldmine for hackers. If cybercriminals manage to breach Microsoft’s servers or exploit vulnerabilities within Copilot’s AI system, the consequences could be devastating.

- Target for Cyber Attacks: Copilot’s centralized data storage makes it an attractive single point of failure. If compromised, attackers could gain access to company trade secrets, client and employee personal data, financial records and contracts, and even healthcare and legal documents.

- AI-Powered Phishing Attacks: Hackers could manipulate Copilot’s AI to generate highly convincing phishing emails, fake invoices, or fraudulent business messages, increasing the likelihood of successful cyber scams.

- Malicious AI Jailbreaking: Just like other AI models, Copilot is vulnerable to jailbreaking, where bad actors trick the AI into bypassing ethical constraints. If compromised, Copilot could be manipulated to execute harmful commands, leak confidential data, or aid in social engineering attacks.

3. User Control and Transparency

Another major concern is that Microsoft has not provided clear options for users to disable Copilot across all Microsoft 365 apps. As currently announced, Copilot is a forced integration across all Office applications, with no way to turn it off or opt out. Businesses that do not want Copilot to track their activity therefore cannot fully disable it, raising concerns about forced compliance.

Microsoft has also not been fully transparent about where Copilot data is stored, how long it is retained, and who has access to it. Privacy laws like GDPR (Europe) and CCPA (California) require companies to give users control over their data, and by forcing Copilot into Microsoft 365 applications, Microsoft may face legal scrutiny over data collection without explicit consent.

Public Backlash and Controversy over the Copilot Microsoft 365 Integration

Microsoft’s decision to force the integration of Copilot across all Microsoft 365 applications has sparked widespread frustration among businesses, IT professionals, and everyday users. Many feel that Microsoft is pushing AI adoption without giving users a choice, raising concerns about privacy, security, and control over their own data. Unlike other AI tools that users can opt into, Copilot is baked into the core functionality of Microsoft Office apps, making it impossible to ignore.

Beyond privacy concerns, the 30% price increase for Microsoft 365 subscriptions has only fueled the backlash. Many businesses, especially small and medium-sized enterprises (SMBs), rely on Microsoft Office for essential operations and see this price hike as an unnecessary financial burden. The timing of the increase—coupled with the forced AI integration—has led to accusations that Microsoft is forcing users to pay more for features they may not want or trust. Some businesses are now actively exploring alternative productivity tools to avoid both higher costs and AI-related security risks.

Despite Microsoft’s claims that Copilot will enhance productivity and improve workflows, the reality is that many businesses feel backed into a corner. With no clear opt-out mechanism and an unavoidable price increase, Microsoft has left customers with a difficult choice: accept Copilot’s security risks and higher costs or look for an alternative productivity suite altogether.

What Does the Copilot Integration Mean for Businesses?

Microsoft’s forced integration of Copilot, combined with a 30% price increase, presents serious challenges for businesses—particularly small and medium-sized enterprises (SMBs) that rely on Microsoft 365 for daily operations. Beyond the financial strain, businesses must now assess how Copilot's security risks impact their data privacy and compliance requirements. For industries that handle sensitive information, such as finance, healthcare, and legal services, Copilot’s constant data tracking could pose a regulatory nightmare if not properly managed.

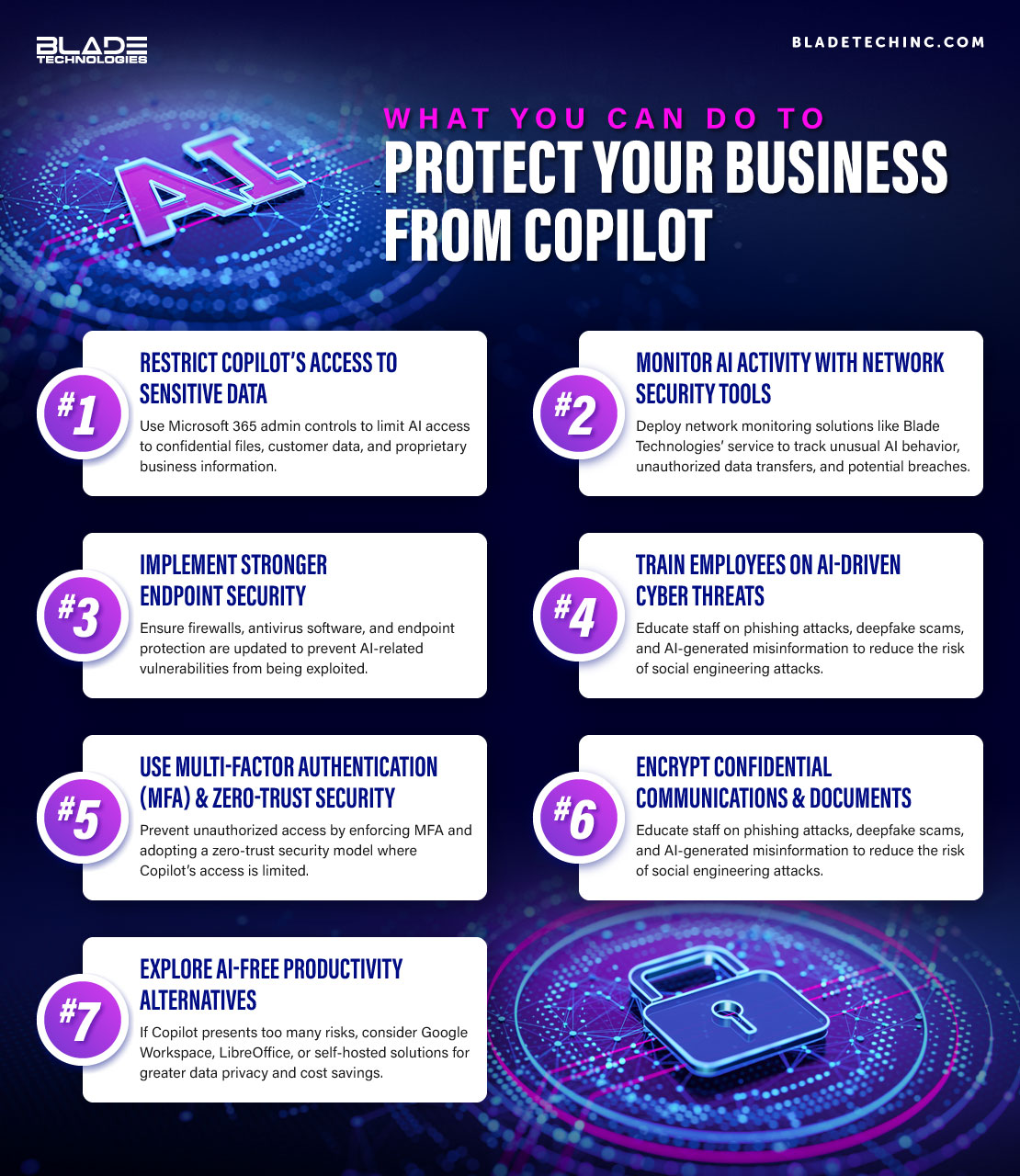

Companies must take immediate action to protect themselves from potential data leaks, AI manipulation, and cybersecurity vulnerabilities introduced by Copilot. Here are a few essential practices to follow:

- Adjust Settings: IT professionals and cybersecurity team members should review and adjust Microsoft 365 security settings to limit Copilot’s access to sensitive information.

- Regular Audits: Completing regular risk assessments and using AI monitoring tools can help detect unauthorized data usage and prevent AI-generated phishing or misinformation attacks.

- Educate Your Staff: Teach your employees about the potential risks associated with Copilot and how to recognize AI-driven security threats.

The financial burden of Microsoft’s price hike is another major concern, particularly for businesses with large teams that require multiple Microsoft 365 licenses. A 30% increase across all subscription tiers adds up quickly, forcing businesses to reconsider whether Microsoft 365 is still the best choice for their productivity needs. For businesses that choose to remain with Microsoft, stronger cybersecurity measures and access controls will be necessary to mitigate the risks associated with Copilot.
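To see how a 30% increase adds up across multiple licenses, here is a minimal Python sketch of the arithmetic. The seat count and the per-seat price are hypothetical placeholders chosen for illustration, not Microsoft's actual pricing; substitute the figures from your own invoice.

```python
from decimal import Decimal, ROUND_HALF_UP

# The 30% increase cited in this article.
PRICE_INCREASE = Decimal("0.30")


def annual_cost(seats: int, monthly_price_per_seat: Decimal) -> Decimal:
    """Yearly licensing cost for a team, rounded to the nearest cent."""
    total = seats * monthly_price_per_seat * 12
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def price_hike_impact(seats: int,
                      monthly_price_per_seat: Decimal,
                      increase: Decimal = PRICE_INCREASE):
    """Return (cost before, cost after, extra cost per year)."""
    before = annual_cost(seats, monthly_price_per_seat)
    after = annual_cost(seats, monthly_price_per_seat * (1 + increase))
    return before, after, after - before


if __name__ == "__main__":
    # Hypothetical example: 50 seats at $10.00 per seat per month.
    before, after, extra = price_hike_impact(50, Decimal("10.00"))
    print(f"Before: ${before}  After: ${after}  Extra per year: ${extra}")
```

With these placeholder figures, a 50-person team would go from $6,000 to $7,800 per year, an extra $1,800 annually. Using `Decimal` rather than floating-point numbers keeps the currency math exact.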

Alternatives to Microsoft 365

While Microsoft 365 has long been the dominant office suite, there are several competitive alternatives that provide businesses with greater data privacy, flexibility, and cost savings. The following tools offer similar functionality to Microsoft 365 without intrusive AI tracking or steep price increases.

Google Workspace

One of the most well-known alternatives is Google Workspace, which includes Gmail, Google Docs, Sheets, Slides, and Meet. Google Workspace provides cloud-based collaboration tools with strong security controls, and while it also incorporates AI features, users have more control over how and when AI is used.

Zoho Office Suite is another viable alternative, offering word processing, spreadsheets, and email services at a lower cost than Microsoft 365, with a strong emphasis on data security and privacy.

LibreOffice

For businesses seeking an open-source solution, LibreOffice is a powerful and completely free alternative that supports Word, Excel, and PowerPoint file formats without requiring an internet connection or subscription fees. It provides an Office-like experience with advanced document editing features and on-site hosting options for companies concerned about cloud-based data security.

Nextcloud Office

Companies looking for enhanced privacy protections may consider Nextcloud Office, which allows organizations to host their own private cloud-based office suite, ensuring complete control over sensitive business data. Only rendered content is sent to the browser to minimize the chance of leaks, and it works with all major document, spreadsheet, and presentation file formats.

Protect Your Business from AI-Driven Threats with Blade Technologies

Microsoft’s forced integration of Copilot into every Microsoft 365 app, coupled with a steep 30% price increase, has left businesses with serious concerns about privacy, security, and cost-effectiveness. While AI-powered tools like Copilot offer productivity benefits, they also introduce significant risks, including continuous data tracking, keylogging capabilities, and vulnerabilities that hackers could exploit. With no clear opt-out option, businesses are now forced to navigate AI-driven changes they may not want or trust.

For businesses that must continue using Microsoft 365, strong security measures are essential. At Blade Technologies, we understand that new AI-driven tools introduce both opportunities and risks. Our network monitoring service helps businesses identify security vulnerabilities, track suspicious activity, and ensure compliance with best practices—especially when dealing with AI-powered software like Microsoft Copilot. If your business is concerned about data privacy, unauthorized tracking, or cyber threats, our experts can help you secure your systems, monitor for breaches, and protect your sensitive information.

Don’t let AI-driven changes put your business at risk. Contact Blade Technologies today to learn how our network security solutions can help you stay protected in an AI-powered world.
